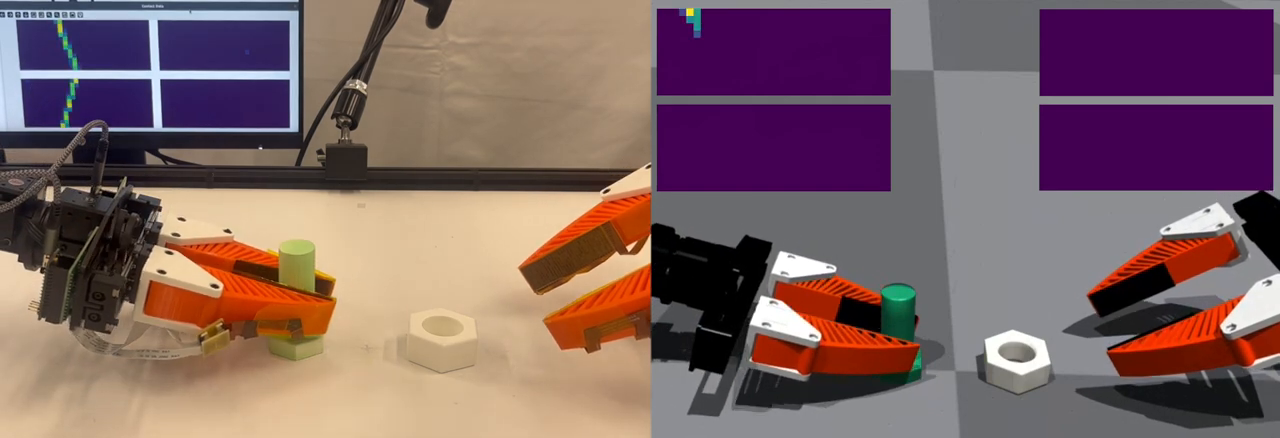

- September, 2025: VT-Refine (bimanual assembly with visuo-tactile feedback) and Dexplore (scalable neural control for dexterous manipulation) are accepted to CoRL 2025.

- Jan, 2025: Our Scene Synthesizer paper is published in JOSS and the code is now publicly available.

- May, 2024: Our FoundationPose paper is accepted to CVPR 2024.

- April 1st, 2023: One paper is accepted to CVPR 2023.

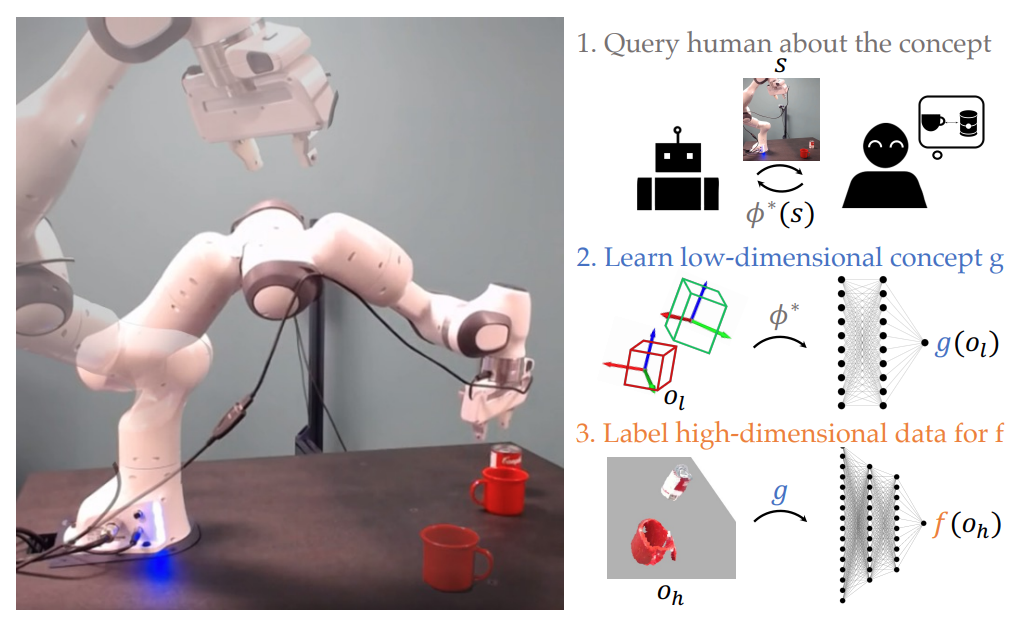

- Oct, 2022: Two papers are accepted to CoRL 2022 and IROS 2022/RA-L.

- May, 2022: Two papers are accepted to ICRA 2022.

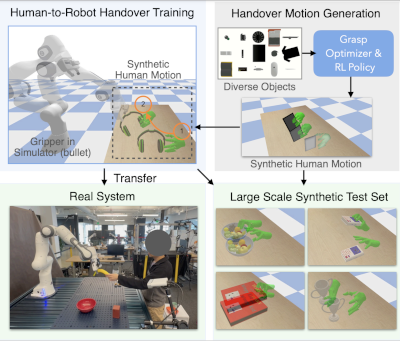

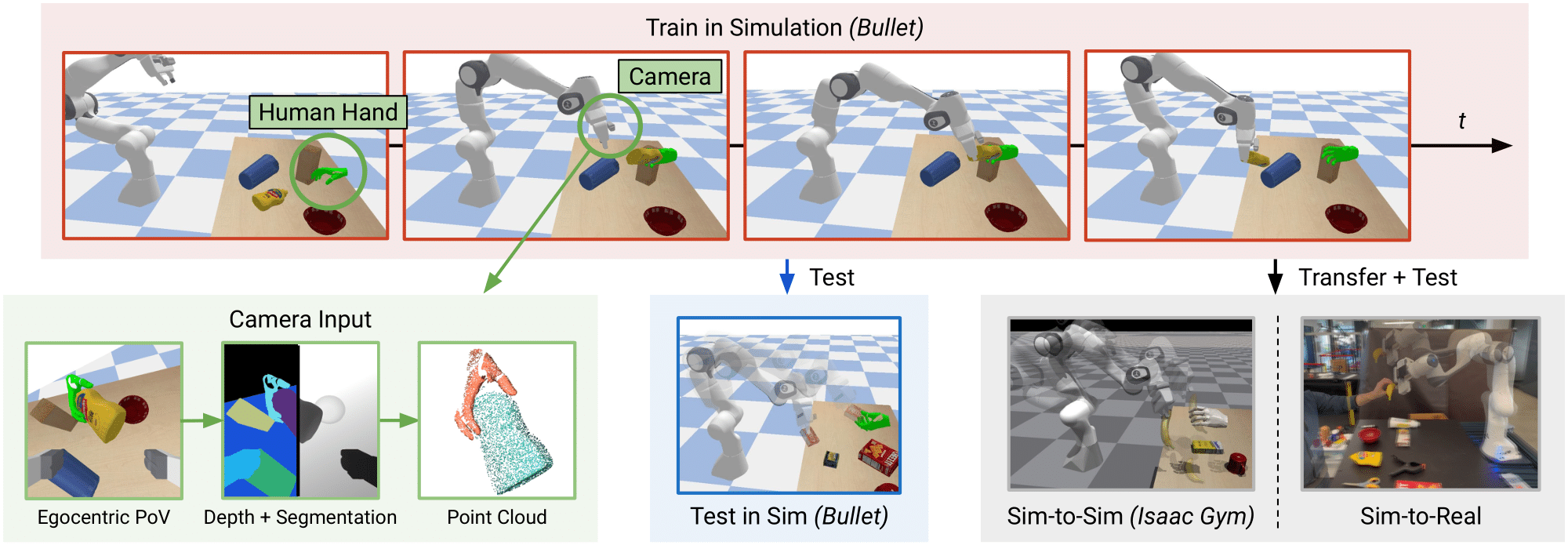

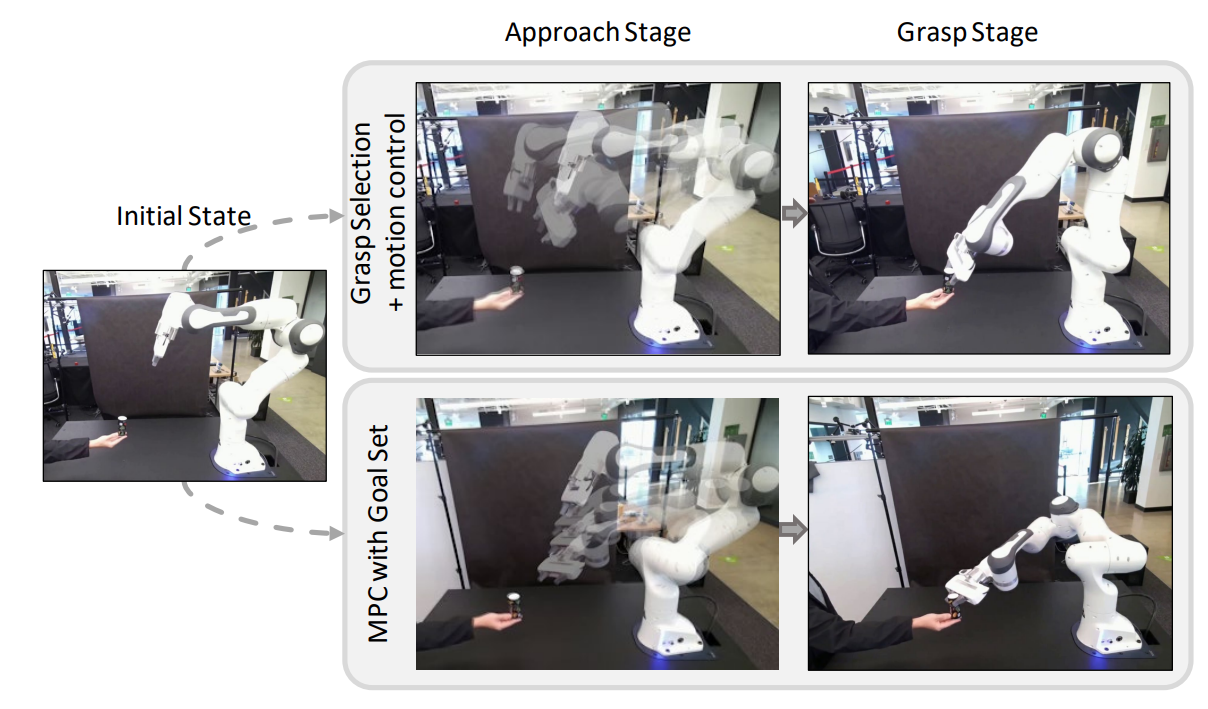

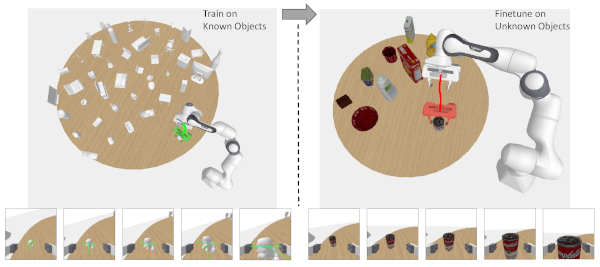

- November, 2021: Our 6D grasping (GA-DDPG) paper is accepted to CoRL 2021.

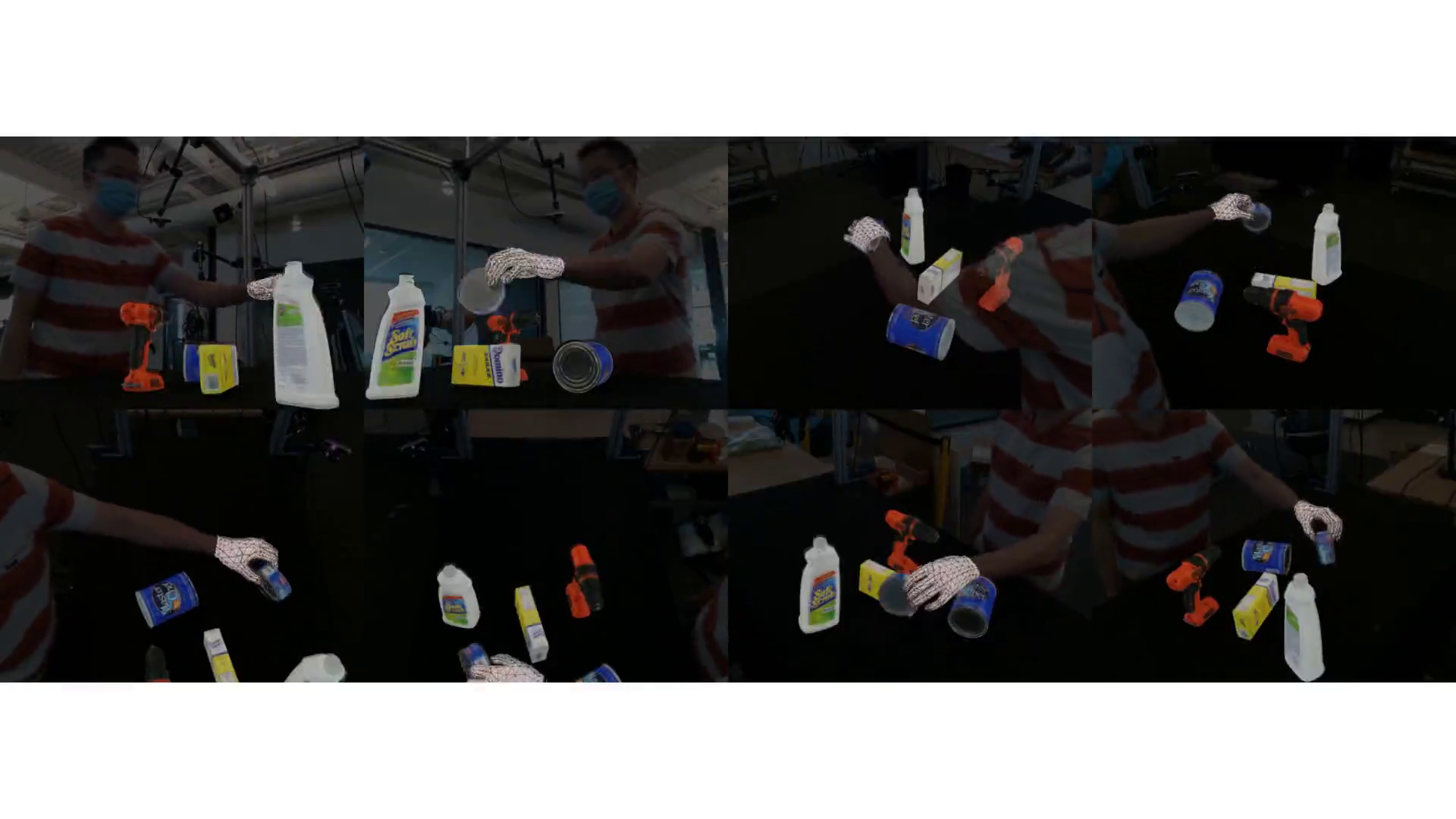

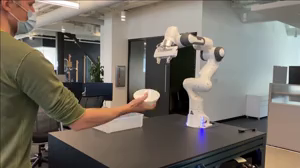

- June 2, 2021: Our H2R handover paper won the 2021 IEEE ICRA Best Paper Award in HRI.

- March 3, 2021: Our DexYCB benchmark for hand grasping of objects is accepted to CVPR 2021.

- Feb 28, 2021: Our reactive handovers paper is accepted to ICRA 2021.

- July 1, 2020: Two papers are accepted to IROS 2020.

- Jan 21, 2020: One paper is accepted to ICRA 2020.

- Jan 7, 2019: One paper is accepted to ICLR 2019.

- Jan 2, 2019: I joined the NVIDIA Seattle Robotics Lab as a Research Scientist.

- Feb 28, 2018: One paper is accepted to CVPR 2018.

- Oct 23, 2017: Our team won 2nd place in the PoseTrack Challenge 2017 (Technical report | Leaderboard).

- Oct 15, 2017: I am a visiting scholar at CMU, working with Prof. Abhinav Gupta (Oct 15, 2017 to April 30, 2018).

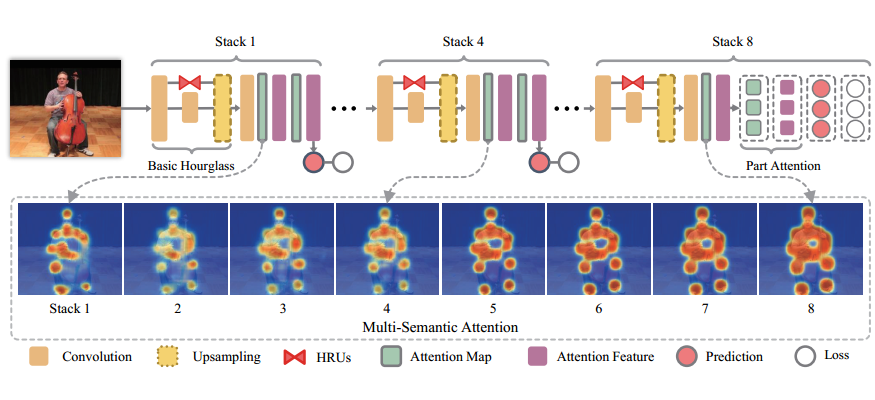

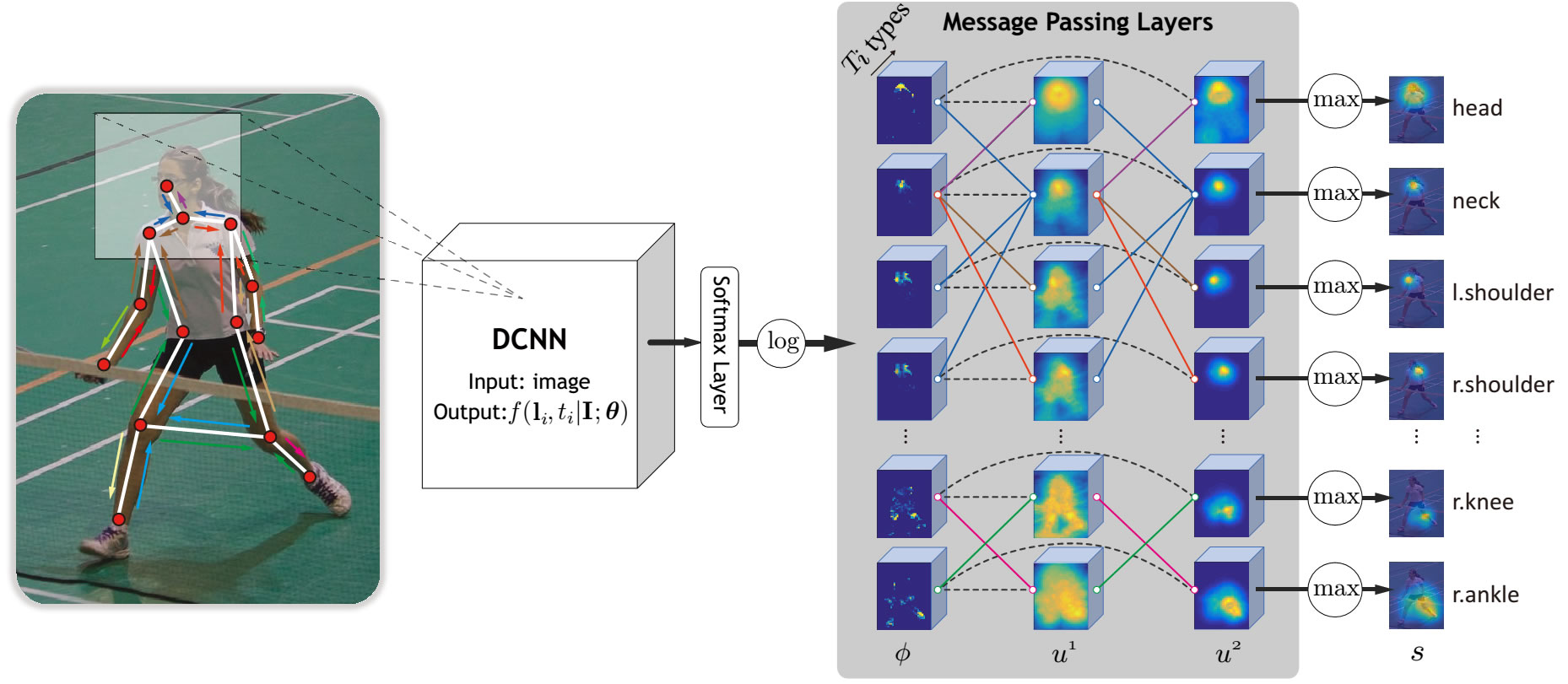

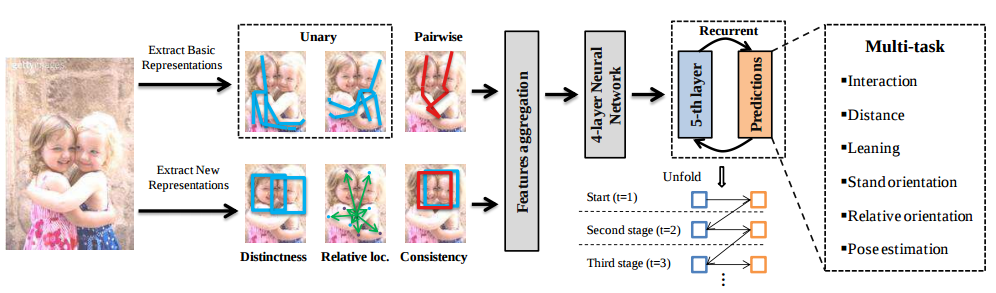

- July 17, 2017: Two papers are accepted to ICCV 2017.

- April 5, 2017: The code for our CVPR 2017 paper is now publicly available.

- March 18, 2017: One paper is accepted to CVPR 2017.

- June 3, 2016: I have fulfilled the candidacy requirement and will advance to the Ph.D. (post-candidacy) stage.

Hello, I'm Wei Yang

I am a Senior Research Scientist at the NVIDIA Seattle Robotics Lab. I received my Ph.D. in Electronic Engineering from the Chinese University of Hong Kong in 2018, under the supervision of Prof. Xiaogang Wang and co-supervision of Prof. Wanli Ouyang. During my doctoral studies, I was a visiting student at the Robotics Institute, Carnegie Mellon University, where I worked with Prof. Abhinav Gupta.

Research interests: Robotic Manipulation, Computer Vision, Embodied Intelligence.